ETHICS, COMPUTING, AND AI | PERSPECTIVES FROM MIT

Robin Wolfe Scheffler

Clues and Caution for AI from the History of Biomedicine

Robin Wolfe Scheffler; photo by Jon Sachs, MIT SHASS Communications

“The very intractability of biology and medicine to computation makes their history an essential counterpoint to more optimistic contemporary discussions of the challenges and opportunities for AI in society. Their past underlines two major points: ‘Quantification is a process of judgment and evaluation, not simple measurement’ and ‘Prediction is not destiny.’”

— Robin Wolfe Scheffler, Leo Marx Career Development Professor in the History and Culture of Science and Technology


The Leo Marx Career Development Professor in the History and Culture of Science and Technology, Robin Wolfe Scheffler is a historian of the modern biological and biomedical sciences. His first book, A Contagious Cause: The American Hunt for Cancer Viruses and the Rise of Molecular Medicine (University of Chicago Press, May 2019), details the history of cancer virus research and its impact on the modern biological sciences. His current research explores whether a formula for success can be discerned from the growth of the biotech industry in Cambridge, Massachusetts. Scheffler holds an appointment in MIT’s Program in Science, Technology, and Society.

• • •

Q: How can the history of science and technology inform our thinking about the benefits, risks, and societal/ethical implications of AI?

To answer, let me start by telling a story from the recent past. In 2014, IBM launched an ambitious program to automate the fraught process of cancer diagnosis and treatment. Watson for Oncology, based on the same Watson AI system that had defeated “Jeopardy!” champions, was meant to digest information ranging from patient scans to doctors’ notes and medical literature, and to produce cancer treatment recommendations both faster and more accurately than teams of oncologists at the world’s best hospitals. However, after several years of use, doctors around the world began to detect persistent problems with the treatments the system recommended.

More than quirks of a machine learning process, these recommendations were a matter of life and death for patients undergoing therapy. In 2017, reporters revealed that the much-hyped system actually relied on the ongoing supervision of a team of doctors at just one hospital, Memorial Sloan Kettering Cancer Center in New York, who engaged in a laborious process of “training” the AI on each type of cancer, often substituting their judgment for Watson’s. Rather than creating an efficient decision-making process, IBM’s system had further obscured the important human dimensions of cancer treatment.

Biology and medicine present architects of machine learning and AI systems with some of their greatest challenges. As unprecedented as it might appear at first glance, the application of AI to the biomedical sciences follows a much older series of flawed efforts to make biology and medicine quantifiable and computable. The very intractability of biology and medicine to computation makes their history an essential counterpoint to more optimistic contemporary discussions of the challenges and opportunities for AI in society. This experience underlines two points of caution in how we approach contemporary AI projects.

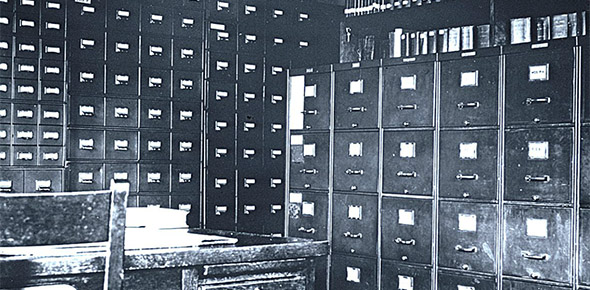

“More data does not always produce better results. The Eugenics Record Office was one of the most intensive early-twentieth-century efforts to promote investigations of human genetics. Its records, pictured above, deepened rather than corrected flawed assumptions about traits such as intelligence.” (Photo: Archives at the Eugenics Record Office, courtesy of the Pickler Memorial Library, Truman State University)

"Biology and medicine provide ample reason to remember that the process of quantification is always a process of judgment and evaluation regarding what types of information are worth knowing."

— Robin Wolfe Scheffler, Leo Marx Career Development Professor in the History and Culture of Science and Technology

Quantification is a process of judgment and evaluation, not simple measurement

A prerequisite for the application of computation to biology and medicine is a set of quantifiable measures of living processes. Yet many features of human life and health are difficult, if not impossible, to quantify. In our drive for convenient metrics, we have often allowed the processes of measurement to obscure important questions regarding how we value and characterize the living phenomena they aim to represent.

The measurement of “intelligence” illustrates the challenges and dangers of attempting to quantify a complex and multifaceted human characteristic. In the early 20th century, American psychologists drew on European tests of the “mental age” of school children to develop quantitative measures of intelligence. During the First World War, the numeric results of intelligence testing offered the United States armed forces an efficient means of sorting millions of recruits. The fact that these tests employed questions that were easy to phrase but of dubious value for assessing mental ability, such as the properties of different automobile models, did not slow this process.

Soon after the war, advocates of the eugenics movement presented the scores of different populations as immutable racial traits and used them as evidence to support policies ranging from immigration restriction to sterilization. While the heritability of intelligence fostered its own vehement debate, many quickly accepted that IQ scores were in fact synonymous with intelligence, despite numerous reservations about what kinds of knowledge and background the testing process actually measured.

Even today, efforts to use genome-wide association studies to construct intelligence scores commit the same conceptual mistake. While it might be possible to determine correlations between IQ scores and gene combinations, these calculations neglect the deeper questions of what intelligence is and what kinds of abilities society should value.

Measurement can obscure information

Even where the outcome is less clearly pernicious, the process of quantification can limit how we think about problems in public health and medicine. In the 1950s, for example, the National Heart Institute turned to the new field of biostatistics to help it analyze the data emerging from a large prospective study of heart disease in Framingham, Massachusetts. Although the physical exams initially included questions about diet, stress, and other habits, statisticians urged doctors to focus on properties, such as blood pressure or cholesterol levels, that were quantifiable and amenable to powerful new multifactor logistic regression techniques that might help forecast future heart disease.

The idea of establishing individual “risk factors” through calculators that focused on a few easily measured variables emerged as a powerful tool in public health. However, the construction of risk factors in this way also obscured the complex social terrain that surrounds individual disease. In the case of cardiac health, the number of cigarettes an individual smoked could be readily assimilated into risk factor calculations, but other elements of their environment that might impact community health, such as exposure to cigarette advertising or the impact of Congressional subsidies on tobacco pricing, could not.
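To make the mechanism concrete, here is a minimal Python sketch of a logistic risk calculator of the general kind described above. The variable names, coefficients, and the extra `ad_exposure_hours_per_week` field are hypothetical, chosen only for illustration; they are not the Framingham study's actual model or values. The point is structural: the score depends only on the variables the modeler chose to quantify, so anything left out of the model cannot move the prediction.

```python
import math

# Hypothetical coefficients for illustration only; these are NOT the
# Framingham study's published values.
INTERCEPT = -7.0
COEFFICIENTS = {
    "systolic_bp": 0.02,        # per mmHg (hypothetical)
    "cholesterol": 0.005,       # per mg/dL (hypothetical)
    "cigarettes_per_day": 0.03, # per cigarette (hypothetical)
}


def risk(patient: dict) -> float:
    """Logistic risk score: 1 / (1 + exp(-(b0 + sum of b_i * x_i))).

    Only the variables listed in COEFFICIENTS enter the calculation;
    any other information about the patient is silently ignored.
    """
    z = INTERCEPT + sum(b * patient[name] for name, b in COEFFICIENTS.items())
    return 1.0 / (1.0 + math.exp(-z))


base = {"systolic_bp": 140, "cholesterol": 220, "cigarettes_per_day": 10}

# The same person, plus a hypothetical contextual feature the model never sees.
with_context = dict(base, ad_exposure_hours_per_week=12)

print(f"{risk(base):.3f}")
print(risk(base) == risk(with_context))  # the extra field has no effect
```

Whatever coefficients are used, a model of this form returns one number per person. Cigarettes smoked can be entered as a variable; advertising exposure or tobacco pricing, unless someone decides to quantify them, are simply absent.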

What types of information are worth knowing?

Biology and medicine provide ample reason to remember that the process of quantification will always entail judgment and evaluation regarding what types of information are worth knowing. The capabilities of computing will always depend on the process of quantification beneath them. Understanding the assumptions built into this process is especially important given the interdisciplinary nature of computation. Scholars arriving from other fields may unwittingly embrace measurement processes that they would not endorse if they had the opportunity to examine them before designing new systems.

Social scientists and human rights activists, such as W.E.B. Du Bois (pictured above), pointed out that insurance analyses of African American mortality mistook cause for effect. (Photo of W.E.B. Du Bois, ca. 1907, public domain.)

"In our drive for numeric measurements, we have often allowed the instruments of measurement to obscure important questions about the living phenomena they aim to measure. The measurement of 'intelligence' illustrates the challenges and dangers of attempting to quantify a complex and multifaceted human characteristic."

— Robin Wolfe Scheffler, Leo Marx Career Development Professor in the History and Culture of Science and Technology

Prediction is not destiny

Multifactorial regression models like those developed for the Framingham study form the conceptual core of the forecasting processes employed by AI in medicine and other fields. The numeric precision of these models lends their forecasts the force of fact. However, as doctors and biologists have long complained, these models are rooted in probability, not in knowledge of biological or physiological mechanisms. Within these models, the correlation of a few variables with an outcome does not mean that these variables produce the result. This distinction becomes important when the predictions of statistical models about health outcomes, which reflect social as much as biological circumstances, are mistaken for the fate of individuals and social groups.

Calculating the “insurability” of an individual (a prediction of their risk of disease or death) has long drawn on advanced methods of data analysis. In 1892, Frederick Hoffman, a life insurance actuary, announced in Boston’s Arena magazine that African-Americans were “doomed by excessive mortality.” Theories of African-American racial inferiority were not new, but Hoffman claimed to substantiate these speculations with reams of data from census reports, public health surveys, military records, and other sources.

Social scientists and civil rights activists, such as W.E.B. Du Bois and Kelly Miller, were quick to point out that Hoffman’s analysis mistook cause for effect. The mortality differences were evidence of the ongoing harm that poverty and discrimination inflicted on African-American health, not proof of racial weakness. Nonetheless, Hoffman soon moved to a position at Prudential Insurance, one of the largest insurance companies in the country. From there, his statistical arguments that high rates of African-American mortality made African-Americans uninsurable led Prudential and other life insurance companies to charge them higher premiums for decades afterward, or to deny them coverage entirely. By erecting further barriers to the health security offered by insurance, Hoffman’s predictions deepened health inequalities.

An understanding of social and political values should guide technical innovation

Today, there are legal protections against discrimination in some forms of health insurance, but the danger of reading predictions as individual destiny continues. AI has become a component of forecasting everything from pregnancy to suicide risk by analyzing large datasets encompassing features of our lives such as social media activity, purchase histories, genetic information, and movement patterns. These new systems operate outside the regulatory framework established to prevent health discrimination, giving companies wide discretion in how to use these predictions without examining their deeper assumptions.

Although we now regard Hoffman’s conclusions and the proposals of the eugenics movement as racist and incorrect, that does not mean similar errors cannot happen again. Decisions made on the assumption of algorithmic fairness or statistical objectivity can easily renew existing inequalities by repackaging social disparities as individual traits.

New developments in AI and health have only deepened the social and ethical dilemmas of quantification and computation. The history of biology and medicine offers a powerful warning: before discussing how AI and computation can solve problems, we should first better understand the nature of the problems we want to solve. This raises a set of issues that cannot be resolved through technical innovation, issues that we ignore at our own peril.

Ethics, Computing and AI series prepared by MIT SHASS Communications

Office of Dean Melissa Nobles

MIT School of Humanities, Arts, and Social Sciences

Series Editor and Designer: Emily Hiestand, Communication Director

Series Co-Editor: Kathryn O'Neill, Assoc News Manager, SHASS Communications

Published 18 February 2019